Just a short update on the 1400CS restoration project. As of the last post from long ago, I had successfully swapped out the broken trackpad but started to have issues with certain keyboard keys not working. I ended up disassembling the PowerBook one more time and cleaning out the keyboard slot on the laptop's motherboard. I used a little bit of rubbing alcohol and a soft lint-free cloth to clean out the slot and sprayed it with a can of compressed air for good measure. Either way, those two things seem to have done the trick! All keys are functional and I am officially stoked.

Once I was able to restore all functionality to the keyboard I started to research what kinds of PCMCIA Ethernet cards would work with the PowerBook. To make a long story short, I eventually learned about a particular card and adapter that would work with the 1400CS PowerBook. I purchased an Asante FriendlyNet Ethernet PCMCIA/Cardbus Adapter for PC and Mac on eBay for a measly $7.00. The immediate mark of compatibility on the included box brought my hopes up. I read through the included documentation, ran through the included installer and installed the necessary extension into my system's Extensions folder, but still no go, even after a reboot. Honestly, I have no idea of what I am doing as this is my first real go with an older version of Mac OS. I have also purchased a Transcend PCMCIA ATA Adapter that should convert a CompactFlash CF Card to work in the other PCMCIA slot. We will see though.

Anyways, the previous owner of this old gem did not bother wiping the hard drive, so it is still running an old version of Mac OS 8 and includes software such as Photoshop, an ancient version of Microsoft Office for Mac, and VirtualPC to name a few. When I started up VirtualPC it booted a Windows 95 VM and quite quickly for that matter. Not bad for this old beast of a laptop.

Lastly, and sadly, there is another recurring issue with the laptop that has been more than a little frustrating and unusual. My current theory is a bad PRAM battery, and why would it not be, considering the PowerBook's age? Here is what happens. I will be happily computing away (and by computing I mean trying to learn Mac OS 8) and the machine will completely lock up on me. I am unable to power off the laptop in any way, shape, or form other than to pull the charging cable and battery out, at which point the unit still seems to have some form of power and current flowing through it, as the green light on the lower right-hand side of the display is still illuminated. Only for a couple of minutes though. At this point, I usually leave it to sit for a couple of hours and then it works again for a while.

On that same note, I am still searching for a working internal CD drive to use to install a Linux OS, but if nothing else, I will search for and use an external one somehow. I see WeLoveMacs has some in stock still, but it's hard to justify paying $150 for one. The PowerBook already has a floppy drive installed, so I suppose I could utilize that for a Linux OS install, although the complexity increases tenfold when going that route and I do not exactly have a huge handful of floppy disks lying around to make it happen.

Thoughts, ideas, comments, suggestions on these issues? I would welcome any and all.

Tuesday, December 29, 2015

Tuesday, October 20, 2015

Useful Twerks, I mean Tweaks

I know. Another long hiatus of no posts. Life has been a whirlwind with my second daughter being born premature as well as me preparing myself for graduate school come spring 2016. Regardless, I want to keep posting here as often as I can. So today, I thought I would write up a small post with a couple of updates on what I have been working on and cover a couple of useful tweaks I have discovered I could make on my Debian systems to improve usability and productivity.

The first tweak is targeted at just the iBook and PowerBook owners. Out of the box, the scroll direction feels unnatural and counterintuitive. Luckily, I was able to discover what trackpad settings could be used to reverse the scroll direction. Once in place, you should be able to pull down on the page and have the browser scroll up and vice versa. To put this configuration in effect, open up your synaptics.conf file and add the following lines:

Option "VertScrollDelta" "-111"

Option "HorizScrollDelta" "-111"

Once those two options have been added, reboot and enjoy reversed scrolling.
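For context, here is a rough sketch of where those two options usually live. It assumes the synaptics X driver and an xorg.conf.d style drop-in file; the Identifier string, the function name, and the output path in the usage note are placeholders I made up, not anything from my own setup.

```shell
#!/bin/sh
# Print an InputClass section that reverses touchpad scrolling.
# The Identifier text is arbitrary; the two Option lines are the ones from this post.
reverse_scroll_section() {
    cat <<'EOF'
Section "InputClass"
    Identifier "touchpad reversed scrolling"
    MatchIsTouchpad "on"
    Driver "synaptics"
    Option "VertScrollDelta" "-111"
    Option "HorizScrollDelta" "-111"
EndSection
EOF
}
```

As root, something like reverse_scroll_section > /etc/X11/xorg.conf.d/50-synaptics.conf would write it out, but treat that path as an assumption and check where your distribution keeps its synaptics configuration first. If synclient is installed, running synclient VertScrollDelta=-111 should let you try the vertical setting in the current session before committing it to a file.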

The other tweak I thought would be useful to share is the ability to spice up and add a bit of color to your bash shell. Add the following lines to either your ~/.bashrc file or the system-wide /etc/bash.bashrc file.

export LS_OPTIONS='--color=auto'

eval "`dircolors`"

alias ls='ls $LS_OPTIONS'

To apply the changes, run the following command (assuming you edited the file in your home directory):

source ~/.bashrc

Why stop there though? If you are a heavy user of vim like myself, you have the ability to add highlighted syntax to your text editor. Browse to /etc/vim/vimrc and uncomment the line below. You will need to make the edit as the root user if you do not already have root privileges.

syntax on

Yep. That is it. The change is immediate.

These two colorful tweaks add a great amount of readability within the terminal, especially the colored syntax highlights. Such a configuration makes it easy to spot typos within configuration files and code as well as pick out certain kinds of items with much more speed and agility.
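If you share one bashrc across several machines, the shell half of this tweak can be made a little more defensive. This is only a sketch of the same three lines with a guard around dircolors, so the file still loads cleanly on a system where that tool is missing.

```shell
# Colorized ls, guarded so the file still loads where dircolors is not installed.
export LS_OPTIONS='--color=auto'

if command -v dircolors >/dev/null 2>&1; then
    # dircolors prints shell code that sets LS_COLORS; eval applies it.
    eval "$(dircolors -b)"
fi

# Single quotes keep $LS_OPTIONS unexpanded until ls is actually run.
alias ls='ls $LS_OPTIONS'
```

The $( ) form does the same job as the backticks shown earlier and is a bit easier to read when quoting gets involved.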

In terms of new hardware, I have scored an ATI FireGL X3 graphics card with 256 MB of VRAM. This particular card is documented as being the second fastest graphics card available for G4 Quicksilvers. The fastest graphics card, the Nvidia GeForce 7800 GS, I also already own! Speaking of the Nvidia card, I have been able to get 2D acceleration working with it as well using the procedure I explained in detail over at PowerPC Liberation, so hopefully I can post on that soon too. Before that though, I want to test the latest Rage 128 patch mentioned here to see what kind of performance I can manage with a nearly 15-year-old card.

Speaking of graphics, a university also provided me with a couple of spare G4 towers and an older 17" Apple Studio Display with an ADC connector. When hooked up to my G4 Quicksilver project machine, it looks magnificent with its 1280x1024 resolution. The Apple Display Connector is a real bugger though, as finding adapters for other display outputs is not as feasible and the Apple branded adapter costs well over $100.

Lastly, I also purchased some royal blue heatsinks for the QS's RAM and they look fantastic, but in terms of performance and temperature difference, I am not sure how they fare. Not only that, but I also scored a Sonnet Serial ATA PCI card for the G4 upgrade project. With that in mind, my next post should get us back on track with the Pimp My G4 Quicksilver project where I wrap things up with the CPU upgrade for the time being.

Wednesday, July 29, 2015

Pimp My Quicksilver - Part IV:B CPU

As promised, this particular post will be short and sweet. First off, I have had to drop the upgraded CPU clock speed back down to 1.33 GHz as so far it seems to be offering the most stability with both operating systems. Research in that department continues.

However, I was still able to move forward with the jump to a 32-bit SMP kernel within Debian. What follows are basically the 3 steps I had to take in order to do so. Even then it is nothing outrageous.

1. Run the following command to search for the most current and appropriate 32-bit SMP PPC kernel within the Debian repositories.

sudo apt-cache search linux-image

This should return a list of about 3 different kernel image packages as well as the corresponding meta-packages and headers for each of them. Look for the SMP image called linux-image-[insert version here]-powerpc-smp. You do NOT want the powerpc64 version as no G4 PowerMac or CPU upgrade for it was ever 64-bit capable. I have left the kernel image version out as it may vary over time.

2. Install the SMP linux image. Right now the current version on my Debian Jessie install is 3.16.0-4.

sudo apt-get install linux-image-3.16.0-4-powerpc-smp

After installing this image, I had no need to manually update my yaboot.conf file as that was done automatically. It would not hurt to double check though to make sure the default kernel is now your SMP one.

3. Reboot

Reboot your machine to allow it to boot using the newly installed multiprocessor capable kernel. Once you are logged in, there are multiple ways you can verify your system is now taking advantage of multiple CPUs, but I will cover the ones I am more familiar with. The first command you can run will help you verify that your system is seeing both CPUs.

cat /proc/cpuinfo

Here are the results of mine:

Okay. Both CPUs are recognized, but are both being utilized by the running system? This is where the command line tool top can come in and provide this information. In a terminal, simply type top and you should see a great deal of information and usage statistics about your running system. You can type the 'h' key for help on the different options you have at your disposal for arranging the layout of the information provided by top as well as what information is displayed. We want to hit the '1' key to provide usage statistics on separate CPUs as can be seen from this screenshot:

Since both CPUs appear here with a % of use, we can be assured that both CPUs are being fully utilized by Debian. Great. How about we take it even one step further by looking at which CPU a particular process is running on? That would provide even more assurance and give us an idea of how and what work is divided up amongst the two CPUs. To do this, we will need to use the ps command line tool. This tool basically provides a snapshot of your system's running processes. Again, while in a terminal, type the following and hit Enter:

ps -eF

Look for the PSR column, which stands for processor. In our case, the resulting number will either be a 0 or a 1 since we only have 2 CPUs and numbering always starts at 0 in computer science. Here is a screenshot highlighting the command running on the QS.
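If you would rather script these checks than eyeball them, the steps in this post can be sketched as a few small shell helpers. The function names are made up for illustration, and each one only filters text, so none of them touch the system on their own.

```shell
#!/bin/sh
# Helper sketches for the steps in this post. All function names are hypothetical.

# Step 1: keep only versioned 32-bit SMP images from `apt-cache search` output
# (this drops the powerpc64 images and the unversioned meta-package).
pick_smp_image() {
    grep -E '^linux-image-[0-9][^ ]*-powerpc-smp( |$)'
}

# Verification: count the "processor" entries in a cpuinfo-style file.
count_cpus() {
    grep -c '^processor' "${1:-/proc/cpuinfo}"
}

# Verification: tally processes per CPU from the PSR column of `ps -eF` output.
# The column is located by its header name rather than a fixed position.
count_by_psr() {
    awk 'NR == 1 { for (i = 1; i <= NF; i++) if ($i == "PSR") col = i; next }
         col > 0 { n[$col]++ }
         END     { for (cpu in n) print cpu, n[cpu] }' | sort -n
}
```

On the Quicksilver these would be used as apt-cache search linux-image | pick_smp_image before the install, then count_cpus and ps -eF | count_by_psr after the reboot. With the SMP kernel running I would expect count_cpus to report 2 and count_by_psr to print one line per CPU, but that is an expectation and not output I captured.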

If you are curious, the -e flag tells the system to include all processes and the -F flag stands for full format, which adds in some additional information to each listed process including the aforementioned PSR column.

That is it for this post. I barely scratched the surface of what the ps and top tools are capable of, so I would encourage you to explore them further. All sorts of neat things are possible. The next (and last) post on the CPU upgrade will take a more in-depth look at performance improvements in the system with more everyday tasks put to the test and some CPU spec comparisons.

Tuesday, July 21, 2015

Pimp My Quicksilver - Part IV:A CPU

Welcome to part IV of the series on upgrading a G4 Quicksilver (QS). If you have stuck around this long, I think you will find that we will start to see some more substantial improvements in terms of overall performance with some of these next few upgrades. For this particular post, I will be focusing on upgrading the CPU. In fact, I will be breaking it down even further than that due to the fact that this particular upgrade has become quite a bit more work than I had originally anticipated. Needless to say, I have learned quite a bit along the way about jumpers, dip switches, voltage, etc.

I was excited about this upgrade as I knew it would do wonders for the QS and upfront I will state I was not disappointed, but more on that in a later post. The stock 7447 G4 Motorola CPU running at 800 MHz was just not cutting it even for tasks as simple as installing updates via apt-get in Debian. As I stated in an earlier post, I was lucky enough to locate a dual 1.4 GHz Giga Designs 7457 CPU upgrade on eBay for quite cheap. In fact, I have been tracking a large number of single and dual CPU upgrades for G4 PowerMacs on eBay these past couple of months, so if you are on the hunt for one, definitely take advantage of this fact as they can sometimes sell quickly! Not only that, but these upgrade gems are becoming harder and harder to come by.

Consider this post the first of 3 regarding the upgrade and the processes and procedures it involved. Part IV:A will cover the actual hardware side of the upgrade including the steps taken to swap out the CPU and the different voltage jumper and dip switch settings I needed to set. Part IV:B will cover the upgrades needed to jump from a single CPU PowerPC kernel to a 32-bit SMP PowerPC kernel within Debian and how to verify it was successful afterwards. And finally, Part IV:C will cover some basic benchmarks from both OS X and Debian to provide a bit of an idea of just how much faster the machine has become.

So onto the upgrade. The easiest part of this whole process was taking out the old stock CPU and swapping in the M5D-1214U Giga Designs dual 1.4 GHz 7457 CPU, which happened to be the first thing I did, not really knowing what I was up against. I followed the steps from iFixit's website for swapping out the CPU here. The new dual processor CPU also has the luxury of having dual fans built on top of it for cooling, so I was able to permanently remove the small 60mm fan that resided behind the old CPU. I would be able to leave it there with the new one, but I will closely monitor temperatures with and without it. This particular upgrade requires only 2 of the 4 screws you removed from the old CPU, but keep the other ones handy just in case. Once I successfully swapped out the CPU and plugged in power to its fans, I closed the G4 up and tried to boot the machine. Sadly, nothing. No boot up chime, bong, whatever you want to call it. I probably pushed the power button 6 or 7 times just hoping it would take off, but that would be too easy.

Long story short, my system needed a particular firmware upgrade that would add support for the 7457 CPU series. Finding this particular upgrade proved to be incredibly difficult, as did figuring out which particular version I needed. I first posted to Apple's discussion forums asking if anybody had any version of the installer lying around they could email me. A user did respond with a link to the Mac Driver Museum that is hosted over at Yahoo that held a few different Giga Designs pieces of software and upgrades under the folder appropriately named CPU Upgrades. You will need a Yahoo account to access these files, which is a bit of a pain in my mind, but I ponied up and created an account. At the time, the only firmware updater version available was 2.0.1. Looking back on things now I see the description says it adds support for 7447A CPUs, which was not what I was upgrading to, but that small tidbit did not click with me at the time.

I downloaded the disk image, extracted it, and copied the files to a separate folder for safe keeping. The firmware update utility, Giga Designs 7447A Updater, was straightforward enough. Here is a screenshot of the main screen you will see during the upgrade.

The default selection of "Install 7447A Firmware Update" is what you would want if you were actually needing this particular upgrade. When you are ready, hit the Continue button and let the software do its magic as I did.

After running this firmware upgrade and rebooting, I again swapped out the CPU, but still no sign of any life. I did some more research online and decided it might be because I needed firmware installer version 3.0.1. I mentioned this in the same Apple Support community discussion I had already started and the same individual who commented previously pointed me to a discussion thread at MacGurus.com where somebody asked for version 3.0.1 as well, which I learned added support for the 7457 CPU upgrades. Bingo!

I responded to the thread asking if anybody would be able to provide me with the same firmware update and within 48 hours I received a personal email from one of the moderators with the update utility included as an attachment. Before I did anything with this version of the updater, I uploaded this piece of software to the Mac Driver Museum file repository for anybody else ever needing it in the future.

To run this particular upgrade, you can simply extract the files from the .sit archive and run the lone Giga Designs Updater 3.0.1 package. Here is a quick screenshot of the installer. As straightforward as can be, so I will not cover it step-by-step.

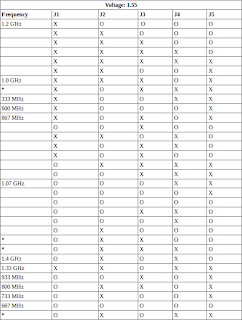

Unfortunately, and frustratingly, still no signs of life after booting up the machine with the Giga Designs CPU in place and freshly installed firmware update. However, I remembered reading a bit more from that same MacGurus thread about different voltage settings you could set on the CPU. Here is a table summarizing those different voltages and how to obtain them.

I thought perhaps the voltage was not set correctly and sure enough, the current setup I had for J6 - J10 was not in the table above. I decided to start with a voltage of 1.55 as 1.6 and 1.65 seemed a bit extreme for a noob like myself. This is where I realized and learned there are also dip switches for J1 - J5 that controlled the CPU's clock frequency. So this meant I would have to also play around with those as well to see which combination of the 5 would give me 1.4 GHz. After scouring the web for some time, I gave up the search for any sort of table showing possible combinations and resulting frequencies. If anybody reading this has this information available, please (I cannot say it enough) share!

Since I was not able to obtain this information, I decided to start building a list of tables with this information myself. There are still many missing results and a lot of unanswered questions I have, but it is a worthy start. Knowing that each of the 5 dip switches had 2 possible states (either on or off) I could easily calculate the total number of possibilities as 2^5 or 32. Yes, that is basically binary numbers and mathematics at its finest. And with 7 different voltages to work with, I could theoretically have up to 224 possible combinations of states between J1 - J10 that could provide me with a working system and CPU. For now, I have only included my results for 1.55v, but eventually, I will link to a PDF showing results for all voltages along with notes regarding missing values, etc. I have read that some frequency results may not be readable by OS X, but require an additional program called GigaMeter, which is available at the Mac Driver Museum as well, to show you what your CPU's clock speed is.

As you can see from the table above I have figured out what combination provides the full 1.4 GHz, but this was one of the results that required the GigaMeter KEXT to be installed, otherwise the speed was reported as 0 MHz in OS X. On the other hand, Debian still reports 0 for clock speed, but neither OS has crashed on me yet so the stability is there. I will see if I can find anything online to correct it within Linux. Some results produced kernel panics with both OS X and Debian and these are marked with an asterisk. Also marked with an asterisk are combinations that successfully produced a start-up chime but would not boot any further. And finally, as you probably have guessed, the blanks are combinations that produced nothing at all (i.e. no startup chime, boot up, etc).
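For anybody wanting to build their own checklist, the counting from earlier (2^5 switch combinations, times 7 voltages) can be sketched in a few lines of shell. The 0/1 notation is just my shorthand here; which digit corresponds to which physical switch position on J1 - J5 is an assumption you would have to settle against your own card.

```shell
#!/bin/sh
# Enumerate every on/off combination of the five frequency dip switches (J1 - J5).
# Each output line is five digits, e.g. 01011; the digit-to-switch mapping is assumed.
list_switch_combos() {
    n=0
    while [ "$n" -lt 32 ]; do
        combo=""
        bit=16
        while [ "$bit" -ge 1 ]; do
            if [ $(( n & bit )) -ne 0 ]; then
                combo="${combo}1"
            else
                combo="${combo}0"
            fi
            bit=$(( bit / 2 ))
        done
        echo "$combo"
        n=$(( n + 1 ))
    done
}

# Total states to test: 32 switch combinations for each of the 7 voltage settings.
total_states() {
    echo $(( 32 * 7 ))
}
```

Redirecting list_switch_combos into a text file gives a ready-made list to tick off, one voltage setting at a time.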

Part of me wonders if I even needed the firmware upgrade for my QS, which was also mentioned by a moderator in the MacGurus thread linked above. Either way, the CPU is still in place and running wonderfully, at least on OS X Leopard. This conclusion will bring us to my next post in this series where I upgrade my Debian install to use a symmetric multiprocessing (SMP) kernel so it takes advantage of both CPUs for even better performance. Do not fret, this next post will be a hell of a lot shorter. :)

P.S. If you have any additional info you can provide regarding the Giga Designs upgrades, feel free to leave comments below or shoot me a private email and I can add it to this post. I would love to make this process much simpler for those in the future. The Giga Designs company is now defunct, their website is gone, and the Wayback Machine could not help me locate the information I needed most as the necessary information used to be hosted in PDF form on their site.

Sunday, June 28, 2015

PPC Linux Backups: Part I

So I've again taken an opportunity to step away from the G4 upgrades project as I have again become frustrated, this time with trying to make the upgraded CPU work. More on that when the post is completed and published.

I decided it would be more than worth it to put together a three part series on backing up your PPC Linux machines. I myself use a combination of PowerPC machines, including a G4 PowerBook, G4 PowerMac QS, dual core G5 PowerMac, and a G4 iBook. Many of the configurations between the 4 are quite similar, but different enough that I'd like the peace of mind knowing that these configurations and other valuable data are backed up, and backed up on a scheduled basis. Part I will cover creating bootable hard drive clones; Part II will cover available software that could automate backups to an external drive or a storage location out on the network; Part III will cover how to create more of a DIY solution using custom scripts and automation tools. I'm going to refrain from using any cloud/offsite backup solutions as they usually cost money and require my personal information.

So onto Part I covering the process involved in creating an exact bootable clone of your existing hard drive. We are going to use the well-known and trusted command line tool dd to complete this task. This is quite the powerful tool that at its basic core simply reads and writes files. With this in mind, this tool should allow us to either make a copy of the entire drive or a single partition if we would like.

As an example, I am going to be cloning my 40 GB Maxtor HD in the G4 PowerMac GS containing my Debian install. I'm going to clone the entire drive instead of just specific partitions, so in essence, it should be an exact bootable clone. I would like to use a drive of the same vendor and capacity, but I do not exactly have many spare 3.5" IDE drives laying around, so for this task I am going to clone the existing drive to a 40 GB Western Digital (WD400JB/WD Cavier SE) HD. The QS has the ability to mount two IDE drives, but I already have both occupied, so I turned to my IDE/SATA to USB 2.0 adapter kit to attach the backup drive.

Below is a screenshot of my current Debian disk and its existing partitions and partition layout. After the cloning operation, which should expect to see the exact same layout on the hard drive clone.

Once your external drive is attached and recognized by your system, you will want to figure out its file name in

Now it is time to run the disk cloning command:

As you will notice, this command requires root privileges. The 'if" part stands for input file and if you are catching on, "of" stands for output file. We are not reading or writing just files, but technically a drive itself is a file (e.g.

The amount of time it takes to clone the drive or partition depends on both the size and speed of the drive, but expect it to take quite a bit of time. For this 40 GB drive clone, it took between 4-5 hours total (I did not time it, although I should for comparisons in drive read/write performance when upgrading to an SSD).

Here are the results from my cloning process:

As you can see there are no error messages, but simply a warning that the destination file ran out of space. This is because my Debian system sees the Maxtor as having 41 GB and the Western Digital as having a 40 GB capacity. Either way, there is no harm in this case. Here is a screenshot of the Western Digital HD after the clone.

I shutdown my system and pulled the live Debian HD and replaced it with the cloned Western Digital HD to test out the backup. Here is my last screenshot of this post showing us with WD drive booted and located at /dev/sda.

So a definite success. Now that I have this cloning process down, I will do the same with the other PPC machines and schedule it to be done on a periodic basis.

Of course, you'll want to tag team this backup approach with another such as backing up with a piece of backup software or writing shell scripts to do the backups for you, which again happens to be part II and part III of this series respectively. As I learned early on in computing, a smart man backups up his data, but a wise man makes backups for his backups.

I decided it would be more than worth it to put together a three-part series on backing up your PPC Linux machines. I myself use a combination of PowerPC machines, including a G4 PowerBook, G4 PowerMac QS, dual-core G5 PowerMac, and a G4 iBook. The configurations of the four are quite similar, but different enough that I'd like the peace of mind of knowing that these configurations and other valuable data are backed up on a scheduled basis. Part I will cover creating bootable hard drive clones; Part II will cover available software that could automate backups to an external drive or a storage location out on the network; Part III will cover how to create more of a DIY solution using custom scripts and automation tools. I'm going to refrain from using any cloud/offsite backup solutions as they usually cost money and require my personal information.

So onto Part I covering the process involved in creating an exact bootable clone of your existing hard drive. We are going to use the well-known and trusted command line tool dd to complete this task. This is quite the powerful tool that at its basic core simply reads and writes files. With this in mind, this tool should allow us to either make a copy of the entire drive or a single partition if we would like.

As an example, I am going to be cloning my 40 GB Maxtor HD in the G4 PowerMac QS containing my Debian install. I'm going to clone the entire drive instead of just specific partitions, so in essence, it should be an exact bootable clone. I would like to use a drive of the same vendor and capacity, but I do not exactly have many spare 3.5" IDE drives lying around, so for this task I am going to clone the existing drive to a 40 GB Western Digital (WD400JB/WD Caviar SE) HD. The QS has the ability to mount two IDE drives, but I already have both occupied, so I turned to my IDE/SATA to USB 2.0 adapter kit to attach the backup drive.

Below is a screenshot of my current Debian disk and its existing partition layout. After the cloning operation, we should expect to see the exact same layout on the hard drive clone.

Once your external drive is attached and recognized by your system, you will want to figure out its file name in /dev. From my reading of the Debian installation guide, all disks detected by the system start with sd (I'm assuming this stands for SCSI device or something similar?) and the first drive is detected as a, the second b, the third c, and so on. For my setup, the external drive happens to be the third attached disk, so its file name is /dev/sdc.

Now it is time to run the disk cloning command:

sudo dd if=/dev/sda of=/dev/sdc

As you will notice, this command requires root privileges. The "if" part stands for input file and, if you are catching on, "of" stands for output file. We are not reading or writing ordinary files here, but on Linux a drive itself is exposed as a device file (e.g. /dev/sda) under /dev. Hopefully that makes sense. Your input and output files will vary depending on your system setup, so double-check them before pressing Enter, because dd will overwrite whatever the output file points to. If you need help identifying this information or if you just want to copy certain partitions, leave me a comment below.

The amount of time it takes to clone the drive or partition depends on both the size and speed of the drive, but expect it to take quite a bit of time. For this 40 GB drive clone, it took between 4 and 5 hours total (I did not time it, although I should for comparisons in drive read/write performance when upgrading to an SSD).

Here are the results from my cloning process:

dd: writing to ‘/dev/sdc’: No space left on device
78165361+0 records in
78165360+0 records out
40020664320 bytes (40 GB) copied, 8500.46 s, 4.7 MB/s

As you can see, there are no other error messages, just a warning that the destination ran out of space. This is because my Debian system sees the Maxtor as having 41 GB and the Western Digital as having a 40 GB capacity. Either way, there is no harm in this case. Here is a screenshot of the Western Digital HD after the clone.
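The figures in that dd summary can be sanity-checked with a little shell arithmetic. The sketch below is only an illustration: the function names are made up for this post, and the three values are copied straight from the output above. It confirms that the record count matches the byte count at dd's default 512-byte block size, and converts the elapsed seconds into hours, which comes out to a bit under two and a half hours, noticeably less than my untimed 4 to 5 hour guess.

```shell
# Figures copied from the dd summary: full records written, bytes, seconds.
records_out=78165360
bytes_copied=40020664320
elapsed_seconds=8500.46

# dd works in 512-byte blocks unless bs= is given, so records * 512 = bytes.
records_to_bytes() {
    echo $(( $1 * 512 ))
}

# Average throughput in MB/s (1 MB = 1,000,000 bytes, as dd reports it).
throughput_mb_s() {
    awk -v b="$1" -v s="$2" 'BEGIN { printf "%.1f\n", b / s / 1000000 }'
}

# Elapsed time converted from seconds to hours.
elapsed_hours() {
    awk -v s="$1" 'BEGIN { printf "%.2f\n", s / 3600 }'
}

echo "bytes written: $(records_to_bytes "$records_out")"
echo "throughput:    $(throughput_mb_s "$bytes_copied" "$elapsed_seconds") MB/s"
echo "elapsed:       $(elapsed_hours "$elapsed_seconds") hours"
```

Since the command ran with the default 512-byte block size over USB 2.0, passing a larger block size such as bs=1M would probably speed things up, though that is untested on this setup.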

I shut down my system, pulled the live Debian HD, and replaced it with the cloned Western Digital HD to test out the backup. Here is my last screenshot of this post showing the WD drive booted and located at /dev/sda.

So a definite success. Now that I have this cloning process down, I will do the same with the other PPC machines and schedule it to be done on a periodic basis.
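If you would like to rehearse the process before pointing dd at a real disk, here is a minimal sketch that clones a small scratch file and then verifies the copy byte for byte with cmp. The function name clone_and_verify and the file names are hypothetical, and the temporary image is only a stand-in for a drive; nothing here touches /dev/sda or /dev/sdc.

```shell
# clone_and_verify SRC DST: copy SRC to DST with dd, then compare byte for byte.
clone_and_verify() {
    src=$1
    dst=$2
    dd if="$src" of="$dst" bs=1M 2>/dev/null || return 1
    cmp -s "$src" "$dst"
}

# Stand-in "drive": a 4 MiB file of random data in a temporary directory.
workdir=$(mktemp -d)
dd if=/dev/urandom of="$workdir/source.img" bs=4096 count=1024 2>/dev/null

if clone_and_verify "$workdir/source.img" "$workdir/clone.img"; then
    echo "clone matches source"
else
    echo "clone differs from source"
fi

# Remove the scratch files again.
rm -rf "$workdir"
```

For real drives you would substitute the device paths and run it as root. When the destination is slightly smaller than the source, as it was for me, a full comparison will stop at the end of the shorter device, so GNU cmp's -n option can be used to limit the comparison to the number of bytes dd reported as copied.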

Of course, you'll want to tag team this backup approach with another, such as backing up with a piece of backup software or writing shell scripts to do the backups for you, which again happen to be Part II and Part III of this series respectively. As I learned early on in computing, a smart man backs up his data, but a wise man makes backups of his backups.

Friday, June 12, 2015

Updates, Aliases, and Network No-Nos

Long time no post (at least it feels like that). My work on the graphics card upgrade was moving along quite nicely, but I've hit a brick wall after compiling the latest nouveau drivers/modules, libdrm, and mesa libraries. On the G5, I simply get an error regarding a GPU lockup, and a botched graphics glitch on the G4 with the updated kernel. Because of that, I've decided two things: 1) I'm going to work on the CPU upgrade next on the G4 and 2) I'm going to table the nouveau drivers project for just a bit and go with a Radeon 9800 Pro for an upgraded graphics card.

Right now, I feel like I'll have a better chance of getting 3D acceleration working with the nVidia Quadro card on the G5, so look for updates on that over on the PowerPCLiberation blog.

On an unrelated note, I want to take a few minutes to discuss command aliases you can set up for your bash shell environment. I've been meaning to do this on my Debian installs for some time, as I've set them up on all the Linux machines at my full-time job. Basically, you can create aliases to make shortened versions of longer commands you run on a regular basis. For example, instead of typing the following:

sudo apt-get update && sudo apt-get upgrade

You can shorten this command to simply the word update, which when run will actually run the command above. To get started with aliases, change into your home directory and create or open a file called .bash_aliases. The syntax for setting up a new alias is as follows:

alias [alias_name]='[full command you are aliasing]'

So for the example I provided above, I would set it up like so:

alias update='sudo apt-get update && sudo apt-get upgrade'

After saving the changes, open a new terminal or run source ~/.bashrc so the shell picks up the new alias (Debian's default .bashrc reads .bash_aliases if it exists). To test it out, type update and watch as this short one-word command runs both the update and upgrade commands!

Here are just a couple of others I usually implement on each of my installs.

alias cdh='cd /home/[username]'
alias cde='cd /etc'
alias cdv='cd /var'
alias ls='ls -alh'

They may not do a whole lot, but the small amount of time these aliases can save does add up over time in the same way the utility kupfer does. I'd encourage you to come up with your own. Feel free to share them in the comments below as they may be helpful for the rest of us.
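To try aliases out without touching your real configuration, here is a minimal sketch that writes a few of them to a scratch file, loads that file into the current shell, and asks the shell to print one back. The scratch file created by mktemp is an assumption made for the demo; on a real install the file would be ~/.bash_aliases.

```shell
# Write the aliases to a scratch file instead of the real ~/.bash_aliases.
aliases_file=$(mktemp)
cat > "$aliases_file" <<'EOF'
alias update='sudo apt-get update && sudo apt-get upgrade'
alias cde='cd /etc'
alias cdv='cd /var'
EOF

# Load the file into the current shell, as ~/.bashrc does for ~/.bash_aliases.
. "$aliases_file"

# Print the definition of one alias to confirm it is registered.
alias update

# Remove the scratch file; the aliases stay defined in this shell session.
rm -f "$aliases_file"
```

Running alias with no arguments lists everything currently defined, which is a handy way to check for typos after editing the file.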

Also, if you happen to run a really long command and, say, forgot to add sudo to the beginning of it, or need to move towards the beginning of the command to retype or add additional commands, simply hit Command + a (or Control + a if you haven't swapped the Command and Control keys). This moves your cursor to the beginning of the command. I happen to use this quite a bit.

One last thing I've learned recently when working with Debian that I thought might be helpful to share. If you are using the well-known NetworkManager, don't also manually edit the /etc/network/interfaces file with network interface configurations, or vice versa. The two separate configurations will conflict with each other and produce unusual, unpredictable, and unusable results. For some time now, I couldn't figure out why I couldn't just plug an Ethernet cable into any of my PPC machines and receive an IP via DHCP without restarting network services or rebooting with the cable already plugged into the machine. Such a simple concept, but it took me a while to figure out.

Oh yeah, and if you'd like to use the GUI wrapper for NetworkManager, simply type nm-connection-editor. That right there might be an excellent candidate for creating an alias in order to shorten it up. Of course, you can also tab to auto-complete the command, but the choice is yours.

So nothing too exciting. Just a few helpful tips for growing and expanding your Linux experience and expertise. Look for the CPU upgrade soon! I'm running into issues with that as well, but I'm more hopeful I'll be able to resolve those quicker than the nouveau drivers. Not only that, the CPU is such a sloth that an upgrade should help move the rest of my posts on the upgrades along much faster.
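As a footnote to the NetworkManager tip above, a quick way to spot a potential conflict is to list which interfaces already have a stanza in the interfaces file. The sketch below is an assumption-laden illustration: the function name list_declared_ifaces is made up, and the sample file is a stand-in written to a temporary location rather than the real /etc/network/interfaces. As far as I understand it, NetworkManager on Debian leaves interfaces declared there alone by default, so anything other than lo showing up here is worth a second look.

```shell
# list_declared_ifaces FILE: print interface names that have an "iface" stanza
# in an ifupdown-style file, skipping the loopback interface.
list_declared_ifaces() {
    awk '$1 == "iface" && $2 != "lo" { print $2 }' "$1"
}

# Stand-in for /etc/network/interfaces with loopback plus a DHCP stanza.
sample=$(mktemp)
cat > "$sample" <<'EOF'
auto lo
iface lo inet loopback

auto eth0
iface eth0 inet dhcp
EOF

echo "declared outside NetworkManager: $(list_declared_ifaces "$sample")"
rm -f "$sample"
```

On a real machine you would call list_declared_ifaces /etc/network/interfaces instead of using the sample file.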

Tuesday, May 12, 2015

Pimp My Quicksilver - Part III: CD/DVD Drive

Welcome to Part III of the Pimp My Quicksilver series where I will be upgrading the CD/DVD drive. Again, this particular component may not be the most exciting or even often used, but I felt it necessary to include.

Here is a System Profile screenshot with the old drive:

As you can see, the drive is not capable of reading or writing DVDs, but does support the reading and writing of CDs. This is the stock Superdrive that shipped with the Quicksilver (QS). Apple reported that this drive is capable of reading CDs at 32X while being able to write to CD-Rs at 24X, which is what I will be using for testing.

Here is a System Profile screenshot of the newly installed drive.

The new drive from MCE, which they had to custom build as it is now an older model, is capable of burning CDs at 48X. So we should see a decent-sized chunk of time cut out when burning files and ISOs to CD. Of course, this MCE drive also supports the burning of the different DVD media types including Dual Layer (DL) at up to 22X depending on the type of DVD media. Definitely a benefit over having no DVD reading or burning capabilities at all.

One last significant difference you may have noticed is the difference in the cache sizes between the two. The stock drive had an 8 MB cache whereas the replacement one only has a 512 KB cache. I'm not all too familiar with caches on CD/DVD drives, but either way it shouldn't really hurt the CD burn tests I will be performing. I'm positive the cache comes more into play with reading files and playback performance.

I plan on burning a copy of the latest version of NetBSD, 6.1.5, to install on an old purple G3 iMac. In a previous post, I discussed the possibility of installing NetBSD (I might have even said FreeBSD) onto my PowerBook 1400CS only to read that it is not actually officially supported as it is one of the older Mac models with the NuBus architecture. Oh well. I still want to give NetBSD a shot, so that's why it's going on the iMac.

The NetBSD ISO I downloaded from here was 313920 KB (~307 MB if you were wondering) in size. Instead of burning it the boring old-fashioned way in OS X using Disk Utility or the Burn application, I decided to burn the ISO to a CD from the CLI while booted into Jessie using the simple and easy-to-use command line tool wodim. This Debian Wiki page has some excellent documentation on how to use wodim and what other CD burning applications are available, but man pages are an excellent resource as well. For a measurement of performance, I will record the time it takes to burn the ISO once using each drive and report the results, which again should speak for themselves. For the media type, I am utilizing a 700 MB Memorex CD-R.

To burn an ISO using wodim, simply run the following:

wodim -dao [InsertFileNameHere].iso

Here are the results:

Stock CD Drive - 147.15s

Upgrade MCE Drive - 100.18s

So we have managed to shave off roughly 32% of the time when burning CDs. A welcome improvement. Woohoo!
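For anyone who wants to check my math, here is a small sketch of the arithmetic using the two burn times and the 313920 KB ISO size from above. The function names are made up for this post, and the effective speed figure assumes the usual 150 KB/s for 1X CD data. Keep in mind the timings are wall-clock times for the whole wodim run, so they presumably include overhead such as finalizing the disc, which would put the effective rate below either drive's rated speed.

```shell
# percent_saved OLD NEW: percentage of time saved going from OLD to NEW seconds.
percent_saved() {
    awk -v old="$1" -v new="$2" 'BEGIN { printf "%.1f\n", (old - new) / old * 100 }'
}

# effective_speed KB SECONDS: average rate as a multiple of 1X CD speed (150 KB/s).
effective_speed() {
    awk -v kb="$1" -v s="$2" 'BEGIN { printf "%.1f\n", kb / s / 150 }'
}

echo "time saved:  $(percent_saved 147.15 100.18)%"
echo "stock drive: $(effective_speed 313920 147.15)X effective"
echo "MCE drive:   $(effective_speed 313920 100.18)X effective"
```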

In other news, I have ordered both a 1.8 GHz Sonnet CPU and a dual 1.4 GHz Giga Designs CPU off of eBay. I wasn't expecting to win the 1.8 GHz, but received notification late Sunday morning that my bid was the highest. Again.. oh well. It will be nice to test out and compare with the Giga Designs CPU. Both are compatible with the QS although from what I've read the Sonnet CPU requires a specific firmware patch from Sonnet that is no longer available on their website. :(

Stay tuned for Part IV covering the CPU upgrade from a single 800 MHz CPU to a dual 1.4 GHz CPU. Things should start to become quite interesting from here on out.

Sunday, May 10, 2015

Pimp My QuickSilver - Part II: RAM

Welcome to Part II of my series on upgrading a G4 Quicksilver (QS) to its maximum capabilities. See Part I here. So a few of the hardware upgrades arrived including the 1.5 GB of RAM, SuperDrive, and nVidia 7800 GS graphics card. Before I could really begin this adventure, I wanted to reload the Quicksilver in its current condition with fresh OS installs with a little bit of customization done for each. That's where the battle began.

First, both 200 GB WD IDE drives failed to be recognized by any installer or currently running system of mine. When they were recognized, they caused all sorts of modprobe errors and slowed the installer considerably, especially when the installer reached the disk partitioning step (of course). I found two matching 40 GB Quantum Fireball 3 drives that did work though and successfully swapped them in after zeroing out both disks using Disk Utility in OS X. Immediately after that, I went to start the install of Debian, but quickly learned that my install CD was not being read properly. Turns out it wasn't the CD, but the actual CD/DVD drive itself. I pulled the working one from my identical Quicksilver to quickly resolve that issue.

Not only that, but starting the OS X installer off of my Leopard DVD had to be done in Open Firmware (OF) off of an external DVD drive. Here is the command I needed to run with the DVD drive plugged into the right-side on-board USB 1.1 port. The left would be usb1.

boot usb0/disk@1:,\System\Library\CoreServices\BootX

If there were multiple partitions on the install DVD, I'd include the partition number the installer was located on after the colon in the above command.

The first 40 GB Fireball HD will house OS X Leopard and the second was going to be home to Debian Wheezy running LXDE. I chose Leopard because it was my first real experience with Mac OS X and I've never been able to grow very fond of OS X 10.4 Tiger. The caveat is that the CPU in my QS only runs at 800 MHz, so I had to use the OF hack to fool the installer into thinking the CPU was running at 867 MHz, which happens to be the minimum requirement for Leopard. Of course, the change does not actually increase the clock frequency of the CPU, but changes what frequency it advertises itself as running at. The change to the CPU clock speed advertisement within OF is only good until the system is rebooted. More information on how to do this can be found here.

Eventually, I also decided to just upgrade to Jessie for the Debian install. As expected, there were video issues as the G4 currently houses an ATI Radeon card. I had to boot to rescue mode and install the firmware-linux-nonfree package before video would work. I have yet to add any additional yaboot parameters to assist with the Radeon card, but haven't really found the need to either. Time will tell.

So all of that and more caused quite a bit of delay in getting this put together. Was it worth it and perhaps a little bit of fun? You betcha. But I now bring you the results of upgrading and maxing out the system's RAM.

As mentioned in Part I, the system started with 1 GB of RAM and I've upgraded it to 1.5 GB (its maximum memory capacity). I ran a series of tests to help gather some hopefully more realistic day-to-day numbers you would see from increasing the amount of available RAM. I've also run GeekBench against the system in OS X just for the heck of it to provide you with those results as well.

The tests include the following:

- Boot Time - Probably not going to be affected a whole lot, but it should be nice to have these numbers available throughout the entire series. By boot time I mean the time it takes for the system to boot to the login screen and have the first typed character appear.

- Browser Launch Time - No add-ons installed, running TenFourFox and Iceweasel 31.6.0. I thought about installing uBlock and XMarks for syncing bookmarks but decided to keep it simple this time around. I'm measuring the amount of time it takes to click the browser icon and type the first letter in the Google search bar on the default home page.

- Maximum # of Browser Tabs - This one should be most interesting for my use. I decided to use Twitter as it is an incredibly memory-hungry website with each tab using anywhere from roughly 20 - 40 MB of memory apiece. I continued to open new tabs and load Twitter until the system was starved of memory and started to swap to the hard disk. I was NOT signed in during my testing.

- GeekBench Results - Ran on the OS X side only as mentioned previously. I'm using version 2.2.7, the latest version available for our PPC machines. You can download it here.

- Other Suggestions - If you have any suggestions, I will gladly run those as well and update this post with those numbers. I'm positive there are better tests to run than what I've outlined above.

On the Jessie side, I kept an eye on things with top. I wanted to use conky, but many of the characters appear garbled. Not sure if it's a font issue or perhaps a screen depth issue? Reloading conky after logging in helps clear up some of the garbled mess, but not all of it.

That aside, let us move on to the memory install. I don't think there really is a need to cover much here as the process to upgrade the RAM is about as straightforward as you could make it. In fact, it is so easy that it's not even a documented procedure for the QS over at iFixit's website. The QS has 3 slots for RAM, and each slot can house a 3.3 volt 168-pin 512 MB stick of PC133 SDRAM, the 133 indicating the speed of the RAM in megahertz. Not knowing enough about SDRAM in particular, I took to the ever faithful Wikipedia to learn more about it. Feel free to do the same. I happened to purchase 3 sticks myself from OWC. I wanted to have a complete set of brand new RAM to run off of.

This particular RAM doesn't include heat spreaders, but I'll live with that for now at least. I should be able to purchase those at a later time. My question is, do they really add that much benefit for this type of RAM? Or would they be more for form rather than function? I'd be interested in hearing your thoughts.

I swapped out the RAM and filled all 3 slots, closed 'er up and watched it boot. Here are my resulting numbers from each of the previously documented tests above.

| Measured Task - Jessie (8.0) | 1.0 GB RAM | 1.5 GB RAM |
| --- | --- | --- |
| Boot Time (in seconds) | (88.22,83.57,83.64) AVG=85.14 | (87.16,83.89,83.92) AVG=84.99 |
| Browser Launch (in seconds) | (7.82,8.31,8.08) AVG=8.07 | (7.78,9.23,8.76) AVG=8.59 |
| Number of Open Tabs | 21 | 43 |

| Measured Task - OS X Leopard | 1.0 GB RAM | 1.5 GB RAM |
| --- | --- | --- |
| Boot Time (in seconds) | (48.99,53.85,48.43) AVG=50.42 | (53.67,51.70,56.07) AVG=53.81 |
| Browser Launch (in seconds) | (8.51,8.63,8.98) AVG=8.71 | (9.28,8.80,9.06) AVG=9.05 |
| Number of Open Tabs | 24 | 43 |
| GeekBench Score (OS X) | 473 (Memory: 323) | 473 (Memory: 323) |
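
If you want to double-check my math, here is a minimal Python sketch that recomputes the averages from the three trials of each test. It also makes a very rough guess at memory per Twitter tab by dividing the extra 512 MB by the number of additional tabs I could open. That last part assumes all of the added RAM went to the new tabs, which is certainly not exactly true, so treat it as a ballpark figure only. The names `TRIALS`, `average`, and `mb_per_extra_tab` are my own and made up for this example.

```python
from statistics import mean

# Trial times in seconds, copied from the results tables in this post.
TRIALS = {
    ("Jessie", "boot", "1.0 GB"): (88.22, 83.57, 83.64),
    ("Jessie", "boot", "1.5 GB"): (87.16, 83.89, 83.92),
    ("Jessie", "browser", "1.0 GB"): (7.82, 8.31, 8.08),
    ("Jessie", "browser", "1.5 GB"): (7.78, 9.23, 8.76),
    ("Leopard", "boot", "1.0 GB"): (48.99, 53.85, 48.43),
    ("Leopard", "boot", "1.5 GB"): (53.67, 51.70, 56.07),
    ("Leopard", "browser", "1.0 GB"): (8.51, 8.63, 8.98),
    ("Leopard", "browser", "1.5 GB"): (9.28, 8.80, 9.06),
}

# Maximum open Twitter tabs (before, after) the upgrade, from the same tables.
MAX_TABS = {"Jessie": (21, 43), "Leopard": (24, 43)}
EXTRA_RAM_MB = 512  # going from 1.0 GB to 1.5 GB adds one 512 MB stick


def average(times):
    """Return the mean of the trial times, rounded to two decimals."""
    return round(mean(times), 2)


def mb_per_extra_tab(tabs_before, tabs_after, extra_mb=EXTRA_RAM_MB):
    """Rough estimate only: assumes all added RAM went to the extra tabs."""
    return round(extra_mb / (tabs_after - tabs_before), 1)


for (os_name, task, ram), times in TRIALS.items():
    print(f"{os_name:8} {task:8} {ram}: AVG={average(times)}")

for os_name, (before, after) in MAX_TABS.items():
    print(f"{os_name}: ~{mb_per_extra_tab(before, after)} MB per extra tab")
```

The per-tab estimates land inside the 20 to 40 MB range I saw for each Twitter tab, which at least suggests the tab counts are in the right neighborhood.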

Most of the numbers you see above should be pretty self-explanatory. For the boot times, I noticed that booting to OS X after using Jessie or vice versa took about 15-20 seconds longer, so for each of the tests above, I made sure to start the boot after having last used the OS I was currently testing. My guess is that the last used startup disk is cached by OF (Open Firmware) somehow. The other significant difference in boot times between Leopard and Jessie is that Jessie has to go through the necessary yaboot parameters to address Radeon driver issues, which adds a good chunk more time when booting up.

I want to quickly note that it took forever to come up with the maximum number of open Twitter tabs, as the CPU became overworked after opening the second tab in both operating systems. :) However, once Twitter loaded on each tab, the browser's responsiveness and stability would return. Here is a screenshot of Activity Monitor when I hit 43 Twitter tabs in OS X with 1.5 GB of RAM.

The only other thing worth noting at this point is that the Geekbench score did not change. In fact, the numbers were exactly the same through and through. That makes me a little suspicious, but I'm not familiar enough with the application to really argue anything at this point.

That's it for this segment. Not super exciting given that the system only maxes out at 1.5 GB of RAM, the upgrade is super simple, and the rest of the system is still quite the bottleneck at this point. Part III will tackle the CD/DVD drive upgrade. Stay tuned.

Labels: built RAM tough, Debian PPC, Leopard, ppc, RAM, upgrade

Wednesday, April 22, 2015

An Applet a Day Keeps the Doctor Away

I'm subscribed to the Debian PPC mailing list (if you aren't yet, you definitely should be!) and recently read about a "new" GNOME battery menu applet (in both GTK2 and GTK3 versions) for our PowerPC portables, as well as a version of it that was ported to C.

You can read more about the applet here and the C code can be downloaded from GitHub here. The version written in C is actually relatively straightforward code to read through. As another user mentioned, it is kind of cool to see how a menu applet can actually be written and how it works.

Once you've downloaded the zip file, unpack it by running the following command inside the folder where you downloaded the zip, such as your user profile's Downloads folder.

unzip mac-battery-applet-master-master.zip

Change directories into the unzipped folder and run:

make install

This should copy the Linux PPC executable to your /usr/local/bin folder, so go ahead and type mac- and it should auto-complete the command to mac-battery-applet for you. Hit enter and you should see a small battery icon appear in your task bar/panel, as can be seen in the screenshot below.

Hovering your mouse over the icon brings up a small pop-up of sorts that gives you the remaining estimated battery capacity and number of minutes of runtime until the battery is depleted.

Of course, you don't want to manually run this every time you reboot or power on your PPC laptop, so go ahead and add it to the necessary startup configuration for your desktop environment. For LXDE, I added the line @mac-battery-applet to my autostart file located at /etc/xdg/lxsession/LXDE.

There are numerous battery icon files for showing the percentages of remaining capacity, and they exist for both when the laptop is on battery power and when it is charging. When the laptop is plugged in and charging, a small + symbol appears on the upper right-hand side. A function called build_icon_file_name creates the necessary filename, and it uses the code in files.c to construct the path for the corresponding file for that % of battery based on the built filename. There is a lot more to it all, but pretty cool I think overall. Some might prefer to have more granular results, but I think the 10% increment icons are an okay trade-off. Ideally, I might do 5% increments, but that could become quite tedious when creating the icon files.
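
To give a feel for the idea, here is a small Python sketch of how a battery percentage could be mapped to an icon filename in fixed increments. This is NOT the applet's actual C code: the function names, the filename pattern, and the choice to round down to the nearest step are all assumptions of mine for illustration. It also counts how many icon files each increment size would need, assuming one icon per step from 0% to 100% for both the battery and charging states.

```python
def icon_file_name_sketch(percent, charging, step=10):
    """Hypothetical mapping from battery percentage to an icon filename.

    The naming scheme ("battery-080.png", "charging-080.png") is made up
    for this example and may not match the real applet's icon files.
    """
    percent = max(0, min(100, percent))  # clamp to a sane range
    bucket = (percent // step) * step    # round down to the nearest step
    state = "charging" if charging else "battery"
    return f"{state}-{bucket:03d}.png"


def icon_count(step):
    """Icons needed for 0..100% in the given step, times two power states."""
    return (100 // step + 1) * 2


print(icon_file_name_sketch(87, charging=False))
print(icon_file_name_sketch(87, charging=True, step=5))
print(icon_count(10), icon_count(5))
```

Under those assumptions, moving from 10% to 5% increments nearly doubles the number of icons to draw, which is why I call it tedious.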

Reading through the README, it says there is currently no Debian package for this applet, but the author states he would be more than willing to let someone else tackle that project. I've personally never built one, but perhaps this would be a good chance to finally learn what goes into building a Debian package, if it is even that much work. I've heard arguments on both sides.

Anyways, there is much more valuable information in the links I provided, including possible future updates to the applet, such as custom icon themes, so I encourage you to check those out. Did I mention this was done on a recently purchased 1.42 GHz G4 iBook? Yeah, I am stoked. I'm almost done with the memory upgrade and tests on the G4 QS. Look for it in the next couple of days.

Wednesday, April 1, 2015

Thoughts on the Filesystem's Future

If you don't mind too much, I'd like to sort of run astray from my usual PPC related content here and discuss my thoughts about the future of the filesystem. Roughly 99% of it is entirely my opinion so take everything with what some call a "grain of salt."

I've recently read a couple of articles on the subject of the filesystem that cover arguments about both its impending doom and its forever omnipotence in computing devices. Since then I've wanted to add in my own thoughts on the matter. For some reason, I am currently unable to access the article I am referring to, but will check back periodically to try and post a link. The articles are not so much discussing the actual end of files and the filesystem as the idea of access to it being stripped or abstracted away from a typical user. Much of what you'll read below covers both such scenarios, so hopefully it's as clear as mud.

You could say the topic is still somewhat related to my blog content, as of course our PPC systems are computing devices and would likely be affected by any changes that may or may not happen to the filesystem going forward. I'd like to argue that the filesystem is a construct which is here to stay for a very considerable part of the foreseeable future.

Let me provide a few arguments to support that stance. Personally, I believe the filesystem has a long life ahead of it, as it is currently such an integral part of a typical computing device, whether that be a desktop, a mobile phone, a wearable device... the list could go on and on.

Many define the filesystem as the construct allowing the control of the storage and retrieval of data, which is true, but it's so much more than that. Some would also state that the filesystem is a lower level construct, probably even more so these days with mobile device operating systems (Android, iOS), which have abstracted away the idea of files and file organization in one sense, but more on that later. Regardless of whether an end user knows it or not, the filesystem is an important piece in the operation of the device in so many different ways.

Now I'm well aware that so much more is happening between the thin layer that is the filesystem and the actual hardware, but it is a good portion of the glue mixture that brings everything together in the end to make the device useful. I might even go so far as to say it is the very foundation and fabric of any virtual existence.

I often create analogies that relate computer concepts to the human body or a vehicle, as it often helps paint a clearer picture for myself and others of what the subject's function is and how it works. So bear with me here. Files are to the computing system as DNA is to the human body. They contain a lot of the logical computational makeup (not the physical makeup obviously) of the device, helping to shape what it does and, again, how it works. The analogy isn't a perfect fit, but I think it does the job well. Basically, just know files are incredibly crucial at this point to the operations of a device.

My second argument is that the filesystem itself is still rapidly evolving to this very day. Think of the numerous types of filesystems in existence such as FAT32, Mac OS Extended (and Extended Journaled), NTFS, EXT4, ZFS, CIFS, etc. and the differences and similarities between each. Some have been around for ages, but there are always newer versions or replacements being introduced that build on or improve the ideas of those that came before them. Overall, there are still many weaknesses to be resolved and strengths to be improved upon. I could write on and on about the complexities of such filesystems and what they are or are not capable of or what could be done to improve them.

Unfortunately, files and the filesystem have always been incredibly difficult concepts for non-technical individuals to comprehend and make use of, so a lot of them couldn't care less about its existence. Hence the reason for it slowly but surely being phased out on some platforms. To them the filesystem is another layer of complexity they would rather NOT have to care about in the first place. All these end users want to know is that their files are 1) there (and by "there" I mean on the desktop usually), 2) safe, and 3) (for some of the more tech-savvy) secure.

As a network administrator ("IT guy"), it is a bit frustrating trying to teach others to grasp such a concept, as for me it is incredibly straightforward, simple, and analogous to real things in the physical world. I'm sure all of us have heard of the idea of thinking of the filesystem as being quite similar to an actual filing cabinet. So one part of me is partially glad that it's disappearing, as it is less overhead for me when working with end users, but at the same time, I do not want to lose the vast amount of power that comes with having unlimited access to the filesystem. Then again, will it be less overhead for somebody who does IT work/development or will it actually increase? Either way, I believe it's going to further devolve the computer abilities of the masses in many ways and likely decrease the already thin patience of an end user when something does go wrong in relation to their files.

Let me be very clear and state that I'm not in any way against the possibility that something could eventually replace the filesystem, as in time all things must live and die. As all tech-savvy people know, this is most definitely true in the technology world, and it happens at a much more rapid pace than in many other areas of life. The difficult part is trying to imagine or conjure up any sort of idea of what would take its place, as the application and use of the filesystem has been strongly cemented into my understanding of computational devices and how they work. As a coder or developer, I would feel even more strongly about this, as everything I code comes together across multiple files, both my own and those of the system I write code on, to create a lot of the "magic" we take for granted today. I'm being incredibly vague, but again I'm trying to paint a clear picture here.

Part of me wonders if the idea, or even the files themselves, will somehow be virtualized beyond what they currently are and can be today. I'm talking at a much lower level than what is available to us today through actual files (of course they are not physical) and virtual hard disks using technologies such as VMware, XenApp, Linux KVM, or Hyper-V. Beyond that, my mind simply draws a dark, empty void which nothing can fill.